What is Meant by AI Model Security?

Introduction to AI Model Security

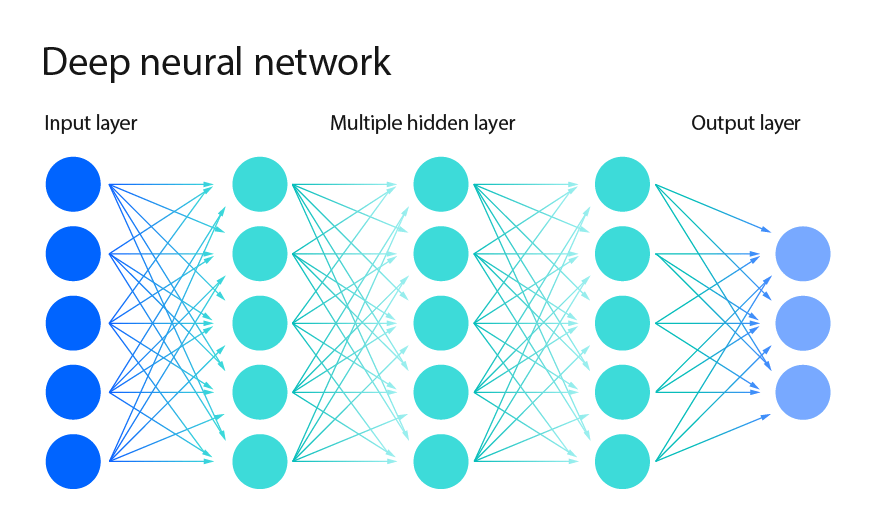

In the age of artificial intelligence, securing AI models has become a paramount concern for businesses, governments, and individuals. AI model security refers to the practices, strategies, and technologies used to protect AI models from a variety of threats, including adversarial attacks, data poisoning, model theft, and unauthorized access. As AI systems are increasingly deployed across various sectors, ensuring the integrity, confidentiality, and reliability of these models is critical for maintaining trust and preventing misuse.

Why AI Model Security is Crucial

The growing reliance on AI across industries such as finance, healthcare, autonomous vehicles, and cybersecurity highlights the importance of AI model security. A compromised AI model can lead to catastrophic outcomes, from incorrect medical diagnoses to financial fraud and compromised security systems. Therefore, securing AI models is not just about protecting intellectual property but also about safeguarding the broader societal functions that these models serve.

Key Threats to AI Models

1. Adversarial Attacks

Adversarial attacks involve the manipulation of input data to trick AI models into making incorrect predictions or classifications. These attacks can be subtle, such as altering a few pixels in an image to mislead a model into misidentifying it. Such vulnerabilities can be exploited in critical applications like facial recognition or autonomous driving, leading to dangerous outcomes.
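To make the idea concrete, here is a minimal sketch of one well-known attack, the fast gradient sign method (FGSM), applied to a tiny logistic-regression classifier. The weights, bias, input, and `epsilon` value are hypothetical numbers chosen only for illustration; real attacks target far larger models, but the principle of nudging each feature slightly in the direction that increases the loss is the same.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict(weights, bias, x):
    """Probability of class 1 under a logistic-regression model."""
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)

def sign(v):
    return (v > 0) - (v < 0)

def fgsm_perturb(weights, bias, x, y_true, epsilon):
    """Move each feature by epsilon in the direction that raises the
    cross-entropy loss for the true label."""
    p = predict(weights, bias, x)
    # For logistic regression, d(loss)/d(x_i) = (p - y_true) * w_i.
    grad = [(p - y_true) * w for w in weights]
    return [xi + epsilon * sign(g) for xi, g in zip(x, grad)]

# Hypothetical toy model and input (assumptions, not from any real system).
weights = [2.0, -1.5, 0.5]
bias = 0.1
x = [0.4, 0.2, 0.3]
y_true = 1

x_adv = fgsm_perturb(weights, bias, x, y_true, epsilon=0.3)
clean_score = predict(weights, bias, x)      # above 0.5: classified as 1
adv_score = predict(weights, bias, x_adv)    # below 0.5: classified as 0
```

No feature moves by more than `epsilon`, yet the predicted class flips, which is what makes such perturbations hard to spot.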

2. Data Poisoning

Data poisoning occurs when malicious actors introduce corrupt or biased data into the training dataset of an AI model. This can lead to skewed or inaccurate predictions, undermining the model’s reliability. In sectors like finance, where AI models are used for fraud detection, data poisoning can be particularly damaging.
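The effect can be illustrated with a deliberately simple sketch. The example below assumes a toy nearest-centroid "fraud detector" that looks only at transaction amount; the data, labels, and function names are all hypothetical. A handful of large transactions mislabelled as legitimate is enough to drag the "legitimate" centroid upward and change the verdict on a suspicious transaction.

```python
def train_centroids(samples):
    """Nearest-centroid 'model': the mean feature value for each label."""
    sums, counts = {}, {}
    for value, label in samples:
        sums[label] = sums.get(label, 0.0) + value
        counts[label] = counts.get(label, 0) + 1
    return {label: sums[label] / counts[label] for label in sums}

def classify(centroids, value):
    """Return the label whose centroid is closest to the value."""
    return min(centroids, key=lambda label: abs(value - centroids[label]))

# Hypothetical transaction amounts: label 0 = legitimate, 1 = fraud.
clean = [(10.0, 0), (12.0, 0), (11.0, 0), (95.0, 1), (100.0, 1), (105.0, 1)]
# The attacker injects large transactions mislabelled as legitimate.
poison = [(98.0, 0), (102.0, 0), (100.0, 0)]

clean_model = train_centroids(clean)
poisoned_model = train_centroids(clean + poison)

suspicious = 70.0
clean_label = classify(clean_model, suspicious)        # flagged as fraud (1)
poisoned_label = classify(poisoned_model, suspicious)  # passes as legitimate (0)
```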

3. Model Inversion Attacks

Model inversion attacks aim to extract sensitive information from a trained AI model. For instance, an attacker might use the model to recreate parts of the training data, such as reconstructing an individual’s image from a facial recognition model. This poses significant privacy risks, especially in applications involving personal data.

4. Model Theft

Model theft involves the unauthorized copying or reverse engineering of an AI model. Competitors or malicious actors may attempt to steal a well-trained model to use it for their own purposes, bypassing the significant investment made in its development. This not only results in financial loss but also undermines competitive advantages.
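One way reverse engineering can happen is through the model's own prediction interface. The sketch below assumes the simplest possible case: a purely linear model exposed through a hypothetical `query` function that returns raw scores. Under those assumptions, a caller can recover every parameter with one query per feature plus one more. Real models are far harder to copy exactly, but the example shows why unrestricted, high-precision query access is a risk.

```python
def make_victim():
    """Stand-in for a remote prediction API; the weights are hidden from the caller."""
    secret_w, secret_b = [0.5, -2.0, 3.0], 1.0

    def query(x):
        return sum(w * xi for w, xi in zip(secret_w, x)) + secret_b

    return query

def extract_linear_model(query, n_features):
    """Recover a linear model's parameters from n_features + 1 queries."""
    bias = query([0.0] * n_features)  # the all-zero input reveals the bias
    weights = []
    for i in range(n_features):
        unit = [0.0] * n_features
        unit[i] = 1.0
        weights.append(query(unit) - bias)  # each unit vector reveals one weight
    return weights, bias

victim = make_victim()
stolen_w, stolen_b = extract_linear_model(victim, n_features=3)
```

Common mitigations include rate-limiting queries, returning labels instead of raw scores, and monitoring for systematic probing patterns.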

Techniques for AI Model Security

1. Adversarial Training

One of the most effective methods for securing AI models against adversarial attacks is adversarial training. This technique involves exposing the model to adversarial examples during the training phase so that it learns to recognize and resist them. By doing so, the model becomes more robust against potential manipulations.
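As a rough sketch of what this looks like in practice, the example below trains a small logistic-regression model on each clean sample and on an adversarial copy of it generated with the fast gradient sign method. The dataset, learning rate, epoch count, and `eps` budget are hypothetical choices for illustration, not recommended settings.

```python
import math

def sigmoid(z):
    # Split by sign so math.exp never overflows.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def predict(w, b, x):
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)

def sign(v):
    return (v > 0) - (v < 0)

def fgsm(w, b, x, y, eps):
    """Shift each feature by eps in the direction that raises the loss."""
    err = predict(w, b, x) - y
    return [xi + eps * sign(err * wi) for xi, wi in zip(x, w)]

def adversarial_train(data, eps, lr=0.1, epochs=200):
    w, b = [0.0] * len(data[0][0]), 0.0
    for _ in range(epochs):
        for x, y in data:
            # Update on the clean sample, then on an adversarial copy
            # crafted against the weights at the start of this step.
            for sample in (x, fgsm(w, b, x, y, eps)):
                err = predict(w, b, sample) - y
                w = [wi - lr * err * si for wi, si in zip(w, sample)]
                b -= lr * err
    return w, b

# Hypothetical two-feature dataset: label 1 in the upper right, 0 in the lower left.
data = [([2.0, 2.0], 1), ([3.0, 2.0], 1), ([2.0, 3.0], 1),
        ([-2.0, -2.0], 0), ([-3.0, -2.0], 0), ([-2.0, -3.0], 0)]
eps = 0.5
w, b = adversarial_train(data, eps)

# Count how many samples are still classified correctly after an attack.
robust_hits = sum(
    (predict(w, b, fgsm(w, b, x, y, eps)) > 0.5) == bool(y) for x, y in data
)
```

Adversarial training generally makes each training step more expensive, since every batch requires generating fresh adversarial examples against the current model.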

2. Differential Privacy

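Differential privacy is a technique for limiting how much an AI model can reveal about any single individual in its training data. It works by adding carefully calibrated random noise to the data, to the training process (for example, to gradients), or to the model’s outputs, so that the result changes very little whether or not any one person’s record is included. More noise gives stronger privacy but usually costs some accuracy, so the noise level has to be tuned for each application.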
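A minimal sketch of the noise-adding idea is the Laplace mechanism applied to a counting query. The function names, the `ages` list, and the `epsilon` value below are hypothetical. The one fixed ingredient is that adding or removing one person changes a count by at most 1, so Laplace noise with scale `1 / epsilon` is the standard calibration for this kind of query.

```python
import random

def laplace_noise(scale, rng):
    """Laplace(0, scale) sample: the difference of two exponential draws."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)

def private_count(records, predicate, epsilon, rng):
    """Noisy count of matching records.

    A count has sensitivity 1, so the noise scale is 1 / epsilon.
    Smaller epsilon means more noise and stronger privacy.
    """
    true_count = sum(1 for r in records if predicate(r))
    return true_count + laplace_noise(1.0 / epsilon, rng)

rng = random.Random(0)  # seeded only so the example is repeatable
ages = [23, 35, 41, 29, 62, 57, 38, 45]  # hypothetical records
noisy = private_count(ages, lambda a: a >= 40, epsilon=0.5, rng=rng)
```

The true answer here is 4; any single noisy answer may be off by a few, but averaged over many releases the noise cancels out. In production, use a vetted differential-privacy library rather than hand-rolled sampling.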

3. Encryption and Secure Multi-Party Computation

Encryption techniques can be used to protect AI models during both training and deployment. Secure multi-party computation (SMPC) allows multiple parties to collaboratively train an AI model without revealing their individual data. This is particularly useful in scenarios where data privacy is a concern, such as in healthcare or finance.
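One building block behind many SMPC protocols is additive secret sharing, sketched below for a secure sum. The scenario (three hospitals pooling patient counts), the modulus, and the function names are assumptions made for illustration. Full collaborative training requires much more machinery, but the same trick can be used to aggregate values such as model updates without any party seeing another's raw input.

```python
import secrets

PRIME = 2**61 - 1  # modulus for the shares; an illustrative choice

def share(secret, n_parties):
    """Split a value into n random-looking shares that sum to it mod PRIME."""
    shares = [secrets.randbelow(PRIME) for _ in range(n_parties - 1)]
    shares.append((secret - sum(shares)) % PRIME)
    return shares

def reconstruct(shares):
    return sum(shares) % PRIME

# Three hypothetical hospitals, each holding a private patient count.
private_inputs = [120, 340, 95]
n = len(private_inputs)

# Each hospital splits its value and sends one share to every party.
all_shares = [share(v, n) for v in private_inputs]

# Party j adds up the j-th share of every input; a single share reveals nothing.
partial_sums = [sum(all_shares[i][j] for i in range(n)) % PRIME for j in range(n)]

# Combining the partial sums yields only the total, never the individual inputs.
total = reconstruct(partial_sums)
```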

4. Model Watermarking

Model watermarking is a technique used to protect intellectual property by embedding a unique identifier or watermark into the AI model. This watermark can be used to prove ownership and detect unauthorized copies of the model. Watermarking helps companies safeguard their investments in AI research and development.

Challenges in AI Model Security

1. Balancing Security and Performance

One of the main challenges in AI model security is finding the right balance between security and performance. Encryption adds computational overhead that can slow down training and inference, while differential privacy can reduce the model’s accuracy. Therefore, it is crucial to choose security measures whose cost in efficiency and accuracy is acceptable for the application at hand.

2. Evolving Threats

AI security is a constantly evolving field, with new threats emerging as AI technologies advance. Attackers are continuously developing more sophisticated methods to compromise AI models, making it essential for security strategies to be adaptive and forward-looking.

3. Regulatory and Ethical Considerations

As AI technologies become more widespread, regulatory bodies are beginning to focus on AI security standards and practices. Ensuring compliance with these regulations while maintaining the flexibility to innovate poses a significant challenge for organizations. Additionally, ethical considerations such as fairness, transparency, and accountability must be integrated into AI security strategies.

What Are Generative AI Models?

Introduction to Generative AI Models

Generative AI models are a class of artificial intelligence that can create new data or content by learning patterns from existing data. Unlike traditional AI models, which are primarily used for tasks such as classification, regression, or decision-making, generative models are designed to generate new, original outputs. These models have gained significant attention in recent years for their ability to produce realistic images, text, music, and even entire virtual environments.

How Generative AI Models Work

Generative AI models work by learning the underlying distribution of the training data and then generating new samples that are similar to the original data. There are several types of generative models, each with its own unique approach to generating data.

1. Generative Adversarial Networks (GANs)

Generative Adversarial Networks (GANs) are one of the most popular types of generative models. A GAN consists of two neural networks: a generator and a discriminator. The generator creates new data samples, while the discriminator estimates whether each sample is real or generated. The two networks are trained together, typically in alternating steps, with the generator trying to fool the discriminator and the discriminator trying to detect fake samples. Ideally, this adversarial process continues until the discriminator can no longer reliably tell generated samples from real ones.
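The tug-of-war between the two networks shows up directly in their loss functions. The sketch below computes both losses from the discriminator's output probabilities alone; it uses the common "non-saturating" form of the generator loss, and the probability values are made-up numbers rather than outputs of a trained model.

```python
import math

def discriminator_loss(d_real, d_fake):
    """Binary cross-entropy: real samples should score 1, fakes 0."""
    real_term = -sum(math.log(p) for p in d_real) / len(d_real)
    fake_term = -sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return real_term + fake_term

def generator_loss(d_fake):
    """Non-saturating generator loss: the generator wants fakes scored as real."""
    return -sum(math.log(p) for p in d_fake) / len(d_fake)

# Hypothetical discriminator outputs (probability that a sample is real).
early_fake = [0.1, 0.2]   # early in training: fakes are easy to spot
later_fake = [0.5, 0.5]   # later: the discriminator is reduced to guessing

g_loss_early = generator_loss(early_fake)
g_loss_later = generator_loss(later_fake)
d_loss_confused = discriminator_loss([0.5, 0.5], later_fake)
```

As the generator improves, its loss falls while the discriminator's loss rises toward the value it gets from guessing 0.5 for everything.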

2. Variational Autoencoders (VAEs)

Variational Autoencoders (VAEs) are another type of generative model that uses a different approach. A VAE encodes each input as a probability distribution over a lower-dimensional latent space, samples a point from that distribution, and decodes it back into the original space. Once trained, the model generates new data by sampling latent points from a simple prior, typically a standard normal distribution, and passing them through the decoder. VAEs are particularly useful for generating continuous data, such as images and audio.
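Two small pieces of arithmetic sit at the heart of a typical VAE: sampling a latent point from the encoder's Gaussian output, and a KL-divergence penalty that keeps that output close to a standard normal. The sketch below shows both for a diagonal Gaussian. The `mu` and `log_var` values are hypothetical stand-ins for what an encoder network would produce, and the encoder and decoder themselves are omitted.

```python
import math
import random

def reparameterize(mu, log_var, rng):
    """Sample z = mu + sigma * eps, with eps drawn from N(0, 1)."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0)
            for m, lv in zip(mu, log_var)]

def kl_to_standard_normal(mu, log_var):
    """KL divergence from a diagonal Gaussian N(mu, sigma^2) to N(0, I)."""
    return 0.5 * sum(math.exp(lv) + m * m - 1.0 - lv
                     for m, lv in zip(mu, log_var))

rng = random.Random(0)  # seeded only so the example is repeatable

# Hypothetical encoder output for one input, with a 2-D latent space.
mu, log_var = [1.0, 0.0], [0.0, 0.0]

z = reparameterize(mu, log_var, rng)        # latent point passed to the decoder
kl = kl_to_standard_normal(mu, log_var)     # regularization term in the loss
```

Writing the sample as `mu + sigma * eps` keeps the randomness in `eps`, which is what lets gradients flow back to the encoder during training.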

3. Transformer-Based Models

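Transformer-based models, such as GPT-3, are widely used in natural language processing (NLP) for generative tasks. These models use attention mechanisms to weigh how relevant each token in the input is to every other token. Decoder-style models like GPT-3 generate text one token at a time, whereas encoder-only models like BERT are built mainly for understanding tasks, such as classification, rather than open-ended generation. Transformer models can be fine-tuned for specific tasks, such as text completion, translation, and content creation. They have revolutionized the field of NLP by enabling the generation of human-like text with remarkable fluency.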
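The attention mechanism at the core of these models is compact enough to write out by hand. The sketch below implements single-head scaled dot-product attention in plain Python. It leaves out everything else a real transformer has (learned projections, multiple heads, masking, and so on), and the query, key, and value vectors are arbitrary example numbers.

```python
import math

def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d_k)) V."""
    d_k = len(keys[0])
    outputs = []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k)
                  for k in keys]
        weights = softmax(scores)
        # Output is the attention-weighted average of the value vectors.
        outputs.append([sum(w * v[j] for w, v in zip(weights, values))
                        for j in range(len(values[0]))])
    return outputs

# Arbitrary example: one query attending over two positions.
queries = [[1.0, 0.0]]
keys = [[1.0, 1.0], [1.0, 1.0]]
values = [[0.0, 2.0], [4.0, 6.0]]
out = attention(queries, keys, values)
```

Because the two keys are identical here, the query attends to both positions equally and the output is simply the average of the two value vectors.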

Applications of Generative AI Models

Generative AI models have a wide range of applications across various industries, from entertainment and healthcare to finance and cybersecurity. Some of the most notable applications include:

1. Content Creation

Generative AI models are increasingly used in content creation, including writing articles, generating marketing copy, and creating visual content. These models can produce high-quality, human-like content at scale, making them valuable tools for businesses looking to automate and optimize their content production processes.

2. Art and Design

In the art and design world, generative AI models are being used to create original artworks, design products, and even generate entire virtual environments. Artists and designers are leveraging these models to explore new creative possibilities and push the boundaries of traditional art forms.

3. Drug Discovery

In the pharmaceutical industry, generative AI models are playing a crucial role in drug discovery. These models can generate new molecular structures that have the potential to become effective drugs. By exploring vast chemical spaces, generative AI models help researchers identify promising drug candidates more quickly and efficiently.

4. Personalized Recommendations

Generative AI models are also used in recommendation systems, where they generate personalized suggestions for users based on their preferences and behavior. For example, streaming services like Netflix and Spotify rely on machine-learned recommendation systems to suggest movies, TV shows, and music that users are likely to enjoy, and generative techniques are one of the approaches being applied to this kind of task.

5. Cybersecurity

In cybersecurity, generative AI models are employed to simulate and predict potential cyber threats. By generating realistic attack scenarios, these models help security teams anticipate and defend against emerging threats. Additionally, generative models can be used to create synthetic data for training and testing security systems.

Challenges and Ethical Considerations of Generative AI

While generative AI models offer significant potential, they also present challenges and ethical considerations that must be addressed.

1. Misinformation and Deepfakes

One of the most pressing concerns with generative AI models is the potential for misuse, particularly in the creation of deepfakes and misinformation. Deepfakes are AI-generated videos or images that depict realistic but false scenarios, often used to deceive or manipulate the public. The ease with which generative models can create convincing deepfakes raises concerns about their impact on trust, privacy, and security.

2. Bias and Fairness

Generative AI models are only as good as the data they are trained on. If the training data contains biases, the generated outputs may also reflect those biases, leading to unfair or discriminatory outcomes. Ensuring fairness in generative AI models is crucial, especially in applications that impact decision-making in areas like hiring, lending, and law enforcement.

3. Intellectual Property and Ownership

The question of intellectual property and ownership is another challenge in the realm of generative AI. When AI models create new content, such as artwork or music, it raises questions about who owns the rights to the generated content. This issue becomes more complex when the AI model is trained on existing copyrighted material.

4. Privacy Concerns

Generative AI models that use personal data to create new content may inadvertently expose sensitive information. For example, a model trained on medical records could generate synthetic data that still reveals details about real patients. Protecting privacy while leveraging the power of generative AI is a critical challenge that must be addressed.

Conclusion

AI model security and generative AI models represent two critical aspects of the rapidly evolving field of artificial intelligence. Ensuring the security of AI models is essential to protect against a wide range of threats, while generative AI models offer exciting possibilities for innovation across various industries. As AI continues to advance, it is crucial to address the challenges and ethical considerations associated with these technologies, ensuring that they are used responsibly and for the greater good. The future of AI holds tremendous potential, and with the right safeguards in place, it can lead to transformative changes in how we live and work.
